Rent a Human: When AI Decides You Need a Person

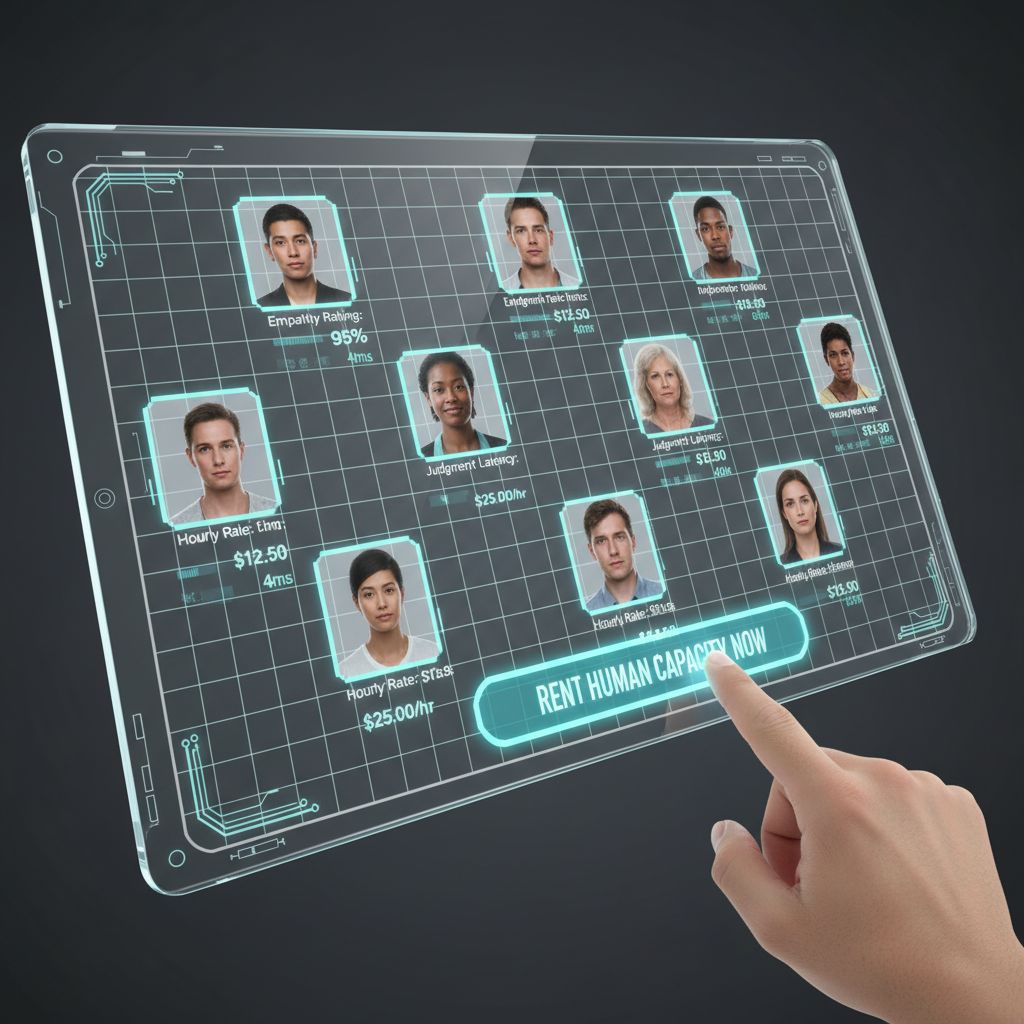

A new website is making the rounds in tech and marketing circles: rentahuman.ai. The premise is simple and unsettling at the same time. Instead of outsourcing tasks to software, you rent an actual human to perform work that AI either cannot do well or should not do at all.

At first glance, it feels like satire. A tongue-in-cheek reversal of the last decade of automation hype. But it is real, and people are using it. Which means it deserves serious attention.

This is not just another quirky startup. It is a signal. A sign that we are entering a phase where the question is no longer “Can AI do this?” but “Should it?”

Let’s talk about why this idea could be dangerous, what unintended consequences might follow, and why, despite all of that, it could also lead to some genuinely positive outcomes.

What “Rent a Human” Really Represents

The website frames itself as a solution for moments when AI fails. You need nuance. Judgment. Empathy. Taste. Context. So you bring in a person.

That framing matters.

For years, humans were the default and machines were the add-on. Now the default is AI, and humans are the exception. A premium service. A fallback.

That reversal should make all of us pause.

When we say “rent a human,” we are not just describing a transaction. We are redefining the role of people in a digital economy where software is assumed to be faster, cheaper, and good enough for most things.

The danger starts there.

The Risk of Turning Humans Into Features

One of the biggest risks is subtle but profound. Language shapes behavior. When people are described the same way we describe tools, we start treating them like tools.

“Rent a human” sounds efficient. Modular. Replaceable.

That mindset can easily bleed into how workers are valued. If humans are just the layer you add when automation fails, then their labor is framed as a cost rather than a contribution. Something to minimize. Something temporary.

This is not new, but AI accelerates it. Gig work already reduced many jobs to tasks. AI reduces them further to exceptions.

The unintended consequence is a workforce that feels optional.

A New Kind of Dehumanization

Automation has always displaced jobs, but it also created new ones. What is different now is how directly AI competes with cognitive work that used to define professional identity.

Writers. Designers. Analysts. Strategists. Customer support. Educators.

When AI is positioned as the default and humans as the backup, it sends a clear message about perceived value.

People are no longer the thinkers. They are the edge cases.

That can lead to a quiet erosion of dignity at work. You are not hired because you are skilled. You are hired because the machine failed.

That psychological shift matters. It changes how people see themselves and how organizations justify compensation, respect, and long-term investment in human talent.

The Race to the Bottom Problem

Another danger is economic.

If humans are brought in only when AI cannot perform a task, those tasks will be increasingly narrow and fragmented. Short bursts of work. High urgency. Low leverage.

That creates downward pressure on pay.

Why pay well when the job is framed as “just the thing AI couldn’t quite do yet”? Especially when there is an assumption that the machine will improve soon.

This could create a race to the bottom where human labor is undervalued precisely because it exists in the shadow of automation.

Ironically, the more complex and human the task, the harder it becomes to justify stable, well-paid roles for it.

Accountability Gets Murky Fast

Here is a practical concern that does not get enough attention.

When AI makes a mistake, responsibility is already fuzzy. Is it the developer? The company? The user?

Now add rented humans into the loop.

If a human is brought in to approve, tweak, or finalize an AI output, who is responsible when something goes wrong? The human? The platform? The AI system that framed the options?

This hybrid workflow sounds sensible, but it can also be a way to offload liability onto individuals who have very little power.

That is not hypothetical. We have seen it in content moderation, logistics, and customer support. Humans are placed between the machine and the outcome, absorbing blame without control.

Normalizing Surveillance and Micromanagement

To make a service like this efficient, platforms will want metrics. Speed. Accuracy. Response time. Error rates.

That invites intense monitoring.

Humans become components in a system optimized for machine performance. Every action logged. Every delay measured. Every deviation flagged.

This is already happening in many workplaces, but framing humans as rentals rather than employees makes it easier to justify invasive oversight.

After all, you are just renting capacity. Not trusting judgment.

The Ethical Shortcut Problem

There is another risk that is easy to miss.

Renting humans can become a way to avoid hard ethical decisions.

Instead of asking whether a task should exist at all, companies may simply route it to a human and move on. Moderating disturbing content. Making borderline decisions. Delivering bad news. Approving questionable outcomes.

The human becomes a moral buffer.

This can allow organizations to claim ethical responsibility without actually changing the system that creates harm in the first place.

Now the Other Side: Why This Could Be a Good Thing

So far, this all sounds bleak. But dismissing the idea outright would be a mistake.

There is something honest embedded in this model.

It acknowledges that AI is not enough.

For years, marketing copy and tech demos have pretended otherwise. Everything is automated. Everything is solved. Humans are slow and messy.

Rent a Human flips that narrative, even if awkwardly. It says there are limits. There are moments where judgment, empathy, and lived experience matter.

That is an important admission.

Revaluing Human Skills That Were Taken for Granted

If done well, this kind of service could actually spotlight the value of deeply human skills.

Not tasks. Skills.

Listening. Sense-making. Ethical reasoning. Cultural awareness. Taste. Creativity that is shaped by lived experience rather than pattern matching.

These are things AI imitates but does not truly possess.

By making human involvement explicit, we may finally get more honest conversations about what machines can and cannot replace.

That could lead to better system design, where AI handles scale and speed, and humans are intentionally placed where meaning and responsibility live.

A Chance to Design Better Human-in-the-Loop Systems

Most human-in-the-loop systems today are poorly designed. Humans are overworked, underpaid, and treated as safety nets.

A platform that openly centers humans could do better, if it chooses to.

That means clear authority. Real decision-making power. Fair compensation. Transparency about how human input is used.

It also means designing workflows that respect human limits rather than pushing people to match machine speed.

If we are serious about responsible AI, this is exactly the kind of design challenge we need to face.

Making Invisible Labor Visible

One positive unintended consequence could be visibility.

Right now, a huge amount of human labor sits behind AI systems, invisible to users. Labeling data. Reviewing outputs. Fixing errors. Handling edge cases.

By openly renting humans, that labor becomes visible. You see the cost. You see the dependency.

That could change public perception. AI stops looking like magic and starts looking like infrastructure that depends on people.

Visibility is often the first step toward accountability.

A New Category of Human Expertise

There is also an opportunity to create a new kind of professional identity.

Not just “person who fixes AI mistakes,” but specialists in human judgment.

People who understand how to work with AI systems, when to trust them, when to override them, and how to explain decisions clearly.

That is a real skill set, and one we will need more of, not less.

If platforms invest in training and recognition rather than treating humans as disposable, this could become a respected role.

The Real Question Is Not the Website

The real issue is not rentahuman.ai itself.

The real issue is the future it hints at.

A future where AI is assumed, and humanity is optional. A future where people are brought in only when systems fail, rather than because people matter.

We still have a choice.

We can design systems where humans are partners, not patches. Where human judgment is valued, not rented by the hour. Where efficiency does not erase dignity.

Or we can slide into a world where the most human parts of work are treated as bugs.

Rent a Human is a mirror. What we see in it depends on what we choose to build next.

The danger is real. So is the opportunity.

The difference will come down to whether we remember that humans are not a feature. They are the point.